Ok, I admit, this is a bit of a clickbait/SEO title as I'm using an ODROID HC1, not a Pi, but the same principle applies. The point is, I'm trying to avoid transcoding the video on the server. While my 10-watt 8-core "little engine that could" may actually be able to transcode the video, it has to do other things too. It's primarily limited on memory right now, but I've been trying to keep things as quiet as possible on there & leave room for more processes in the future.

EDIT: I have been informed that as of 0.0.7 Owncast now supports hardware-accelerated video encoding on the Pi, as long as it is using a 32-bit OS. So if you are interested in using Owncast with a Pi, you should know that what I did is definitely not required; it was more of an experiment or "for fun" thing.

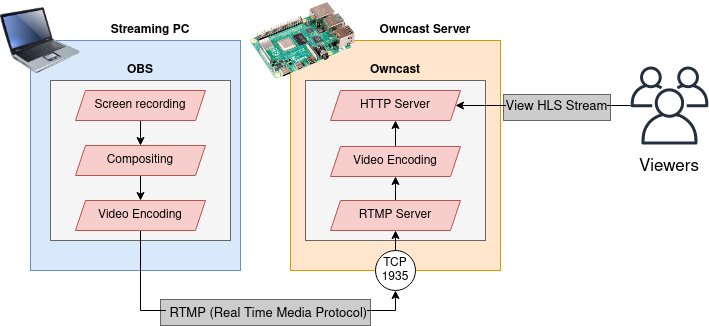

If you aren't familiar with Owncast, it's a great new single-tenant web server application designed to provide an alternative to video streaming platforms like YouTube and Twitch. With owncast, the streamer owns and operates their own server, freeing them from many problems and restrictions associated with the big name platforms.

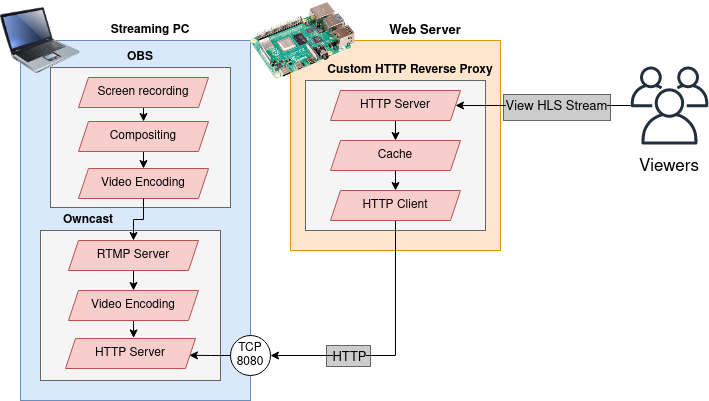

In part 1, I created a custom HTTP caching reverse-proxy server so I could run owncast on my workstation, not on my server. When visitors came to my site to view the stream, their requests would hit the proxy server first, which would then forward them to the owncast instance running on my much-more-powerful workstation. This worked ok, but not great. In hindsight, I should have used a more standard HTTP server like nginx rather than rolling my own. The stream page would often appear broken when my workstation went offline, due to the nuances in how I cached the content versus how different browsers would request it.
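To make the idea concrete, here is a minimal Go sketch of that kind of proxy: it forwards requests to an upstream and, when the upstream is unreachable, replays the last body it saw for that path. This is not the code from part 1. The names `newFallbackProxy`, `staleCache`, `fetch` and `runDemo` are made up for illustration. It also buffers whole responses in memory and does not cache headers, which would not be acceptable for real video segments.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
	"sync"
)

// staleCache remembers the last successful response body for each URL path.
type staleCache struct {
	mu     sync.Mutex
	bodies map[string][]byte
}

// newFallbackProxy forwards requests to upstream and, when upstream cannot
// be reached, replays the last body it saw for the requested path.
func newFallbackProxy(upstream *url.URL) http.Handler {
	cache := &staleCache{bodies: map[string][]byte{}}
	proxy := httputil.NewSingleHostReverseProxy(upstream)

	// Keep a copy of every 200 response as it passes through.
	proxy.ModifyResponse = func(resp *http.Response) error {
		if resp.StatusCode != http.StatusOK {
			return nil
		}
		body, err := io.ReadAll(resp.Body)
		if err != nil {
			return err
		}
		resp.Body.Close()
		resp.Body = io.NopCloser(bytes.NewReader(body))
		cache.mu.Lock()
		cache.bodies[resp.Request.URL.Path] = body
		cache.mu.Unlock()
		return nil
	}

	// Called when the round trip to the upstream fails.
	proxy.ErrorHandler = func(w http.ResponseWriter, r *http.Request, err error) {
		cache.mu.Lock()
		body, ok := cache.bodies[r.URL.Path]
		cache.mu.Unlock()
		if !ok {
			http.Error(w, "stream offline", http.StatusBadGateway)
			return
		}
		w.Write(body)
	}
	return proxy
}

// fetch sends one GET request through the handler and returns status and body.
func fetch(h http.Handler, path string) (int, string) {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, path, nil))
	return rec.Code, rec.Body.String()
}

// runDemo fetches a page while the upstream is up, again after it has gone
// away, and finally a path the proxy has never seen.
func runDemo() (live, stale string, missCode int) {
	upstream := httptest.NewServer(http.HandlerFunc(
		func(w http.ResponseWriter, r *http.Request) { fmt.Fprint(w, "live page") }))
	target, err := url.Parse(upstream.URL)
	if err != nil {
		panic(err)
	}
	proxy := newFallbackProxy(target)

	_, live = fetch(proxy, "/")
	upstream.Close() // simulate the workstation going offline
	_, stale = fetch(proxy, "/")
	missCode, _ = fetch(proxy, "/never-seen")
	return live, stale, missCode
}

func main() {
	fmt.Println(runDemo())
}
```

Even in this toy version you can see where the trouble starts: the cache is keyed by path only, so anything a browser varies per request (headers, range requests, query strings) is ignored.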

It also put a lot of strain on my workstation, because it had to record the screen, do all the compositing, and encode the video not once, but two or three times: once for the RTMP connection to owncast and once for each quality level owncast was configured with.

The way I was running owncast was weird too. I had built my own docker image for it by overlaying my customizations on top of the official image. I knew I eventually wanted to stop doing this & build my own image from the owncast source code, but it was easy to procrastinate.

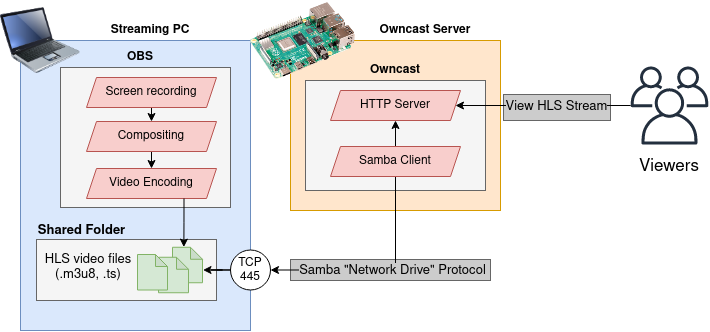

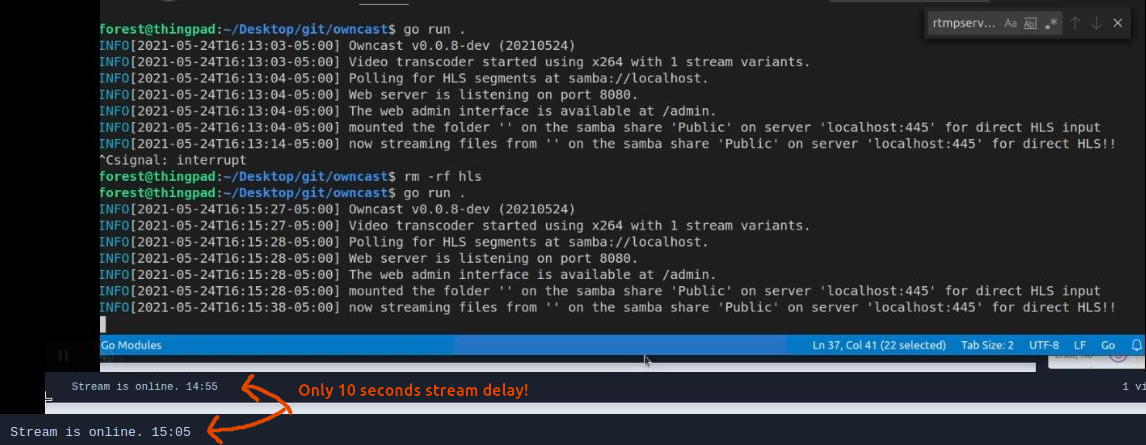

Then, one day I came across an interesting tidbit while I was researching ways to improve my stream. Apparently OBS (Open Broadcaster Software) can output video files formatted for HLS (HTTP Live Streaming), the exact format owncast serves out to viewers. This interested me: I figured if OBS could output the files already formatted for consumption by viewers, it might solve a lot of my problems at once. My workstation / streaming PC would only have to encode the video once & the owncast process could be moved out to the actual web server for convenience, eliminating the need for the buggy caching proxy. (Also, as I would find out later, this would decrease the stream delay considerably.)
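If you haven't looked inside an HLS stream before: it is just a plain-text playlist file (`.m3u8`) plus a series of short video segment files that the playlist lists in order. The Go sketch below parses a made-up playlist and prints the segment names. The sample playlist, the segment file names and the `segmentNames` function are illustrative assumptions, not output captured from OBS or code from Owncast.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// samplePlaylist is a made-up media playlist in the shape HLS uses.
const samplePlaylist = `#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:2
#EXT-X-MEDIA-SEQUENCE:41
#EXTINF:2.000000,
stream41.ts
#EXTINF:2.000000,
stream42.ts
#EXTINF:2.000000,
stream43.ts
`

// segmentNames returns the segment URIs listed in a media playlist.
// Lines starting with '#' are tags or comments; every other non-empty
// line is the URI of a segment.
func segmentNames(playlist string) []string {
	var names []string
	scanner := bufio.NewScanner(strings.NewReader(playlist))
	for scanner.Scan() {
		line := strings.TrimSpace(scanner.Text())
		if line == "" || strings.HasPrefix(line, "#") {
			continue
		}
		names = append(names, line)
	}
	return names
}

func main() {
	for _, name := range segmentNames(samplePlaylist) {
		fmt.Println(name)
	}
}
```

Because the format is this simple, anything that can serve static files can serve an HLS stream, which is what makes skipping the transcoding step possible.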

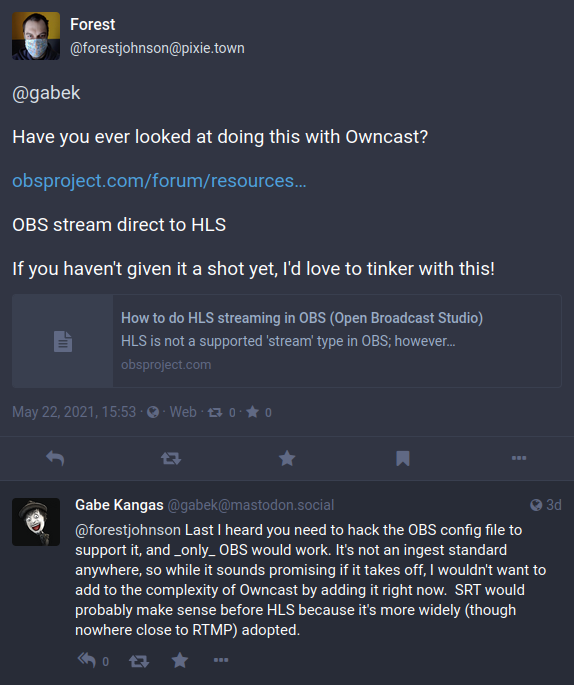

I asked Gabe, the developer of Owncast, about this and he said it wasn't anywhere near his plans for owncast in the future, so I felt better about trying to build it myself: I wouldn't be duplicating work.

So I went ahead and forked Owncast, then started moving my customizations over to my fork. Now that I was modifying the source code rather than overlaying HTML and CSS files on top of the existing docker image, I could also add proper support for a couple of my personal wishlist features, like enabling customization of the image that takes the place of your stream when you are offline and fixing an edge case where the "get current viewers" API is missing viewers' auto-generated usernames until they send a chat message or change their name.

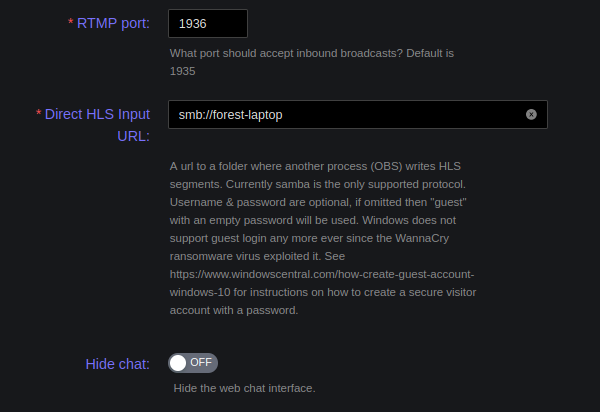

Of course, the most exciting part is the reason I went down this road in the first place: the new directHLSInput input type. This is what it looks like in the Owncast admin interface:

And here is the architecture diagram showing how it works:

Of course, this requires my workstation and owncast server to be on the same network (LAN). Otherwise, I would have to publish the file share to the internet somehow, either by exposing the samba port to the internet, serving the files over HTTP publicly, or uploading them to an intermediate storage location like Backblaze.

Currently my directHLSInputURL feature only supports Samba (aka SMB, this is the protocol Windows uses for mounting network drives), but it could easily be expanded to other protocols. Leave a comment below if you would like to use this feature but need help setting it up 🙂

The other caveat: OBS currently only supports outputting 1 quality level at a time, so stream viewers won't be able to choose their quality level while watching. For my use case I think this is fine, though; it reduces the amount of work my laptop has to do anyway.

Caveats aside, I'm happy with the results I'm getting so far. The stream delay is massively improved, down to only 10 seconds from what felt more like a minute with the old setup.

Also, my workstation CPU runs a lot cooler, I don't get keyboard drop-outs while I'm typing any more, and I think the stream quality has improved even though the video bit rate is lower, since the video is only being encoded once now instead of twice. (This is similar to how JPEG artifacts get worse and worse the more times a JPEG is opened and re-saved.)

When it came time to deploy owncast to my server, I did run into some issues building a docker image that would work on ARMv7. First of all, I had to change the go build from being dynamically linked to being statically linked, because I would be building it on a different operating system / different processor architecture than the one it would eventually run on. (I was building it on my AMD64 Linux workstation, and running it on my ARMv7 single board computer.)

Owncast uses a two-stage docker build, one stage serving as the build environment and one stage serving as the runtime environment. The dynamic linking worked fine originally because both stages were based on the Alpine Linux docker image, & the build environment and runtime were using the same CPU architecture. However, I was not able to figure out how to get the cross-compilation (compiling a binary for the ARMv7 CPU architecture on a computer using the AMD64 CPU architecture) to work on Alpine Linux, so I had to change the first stage to use Debian as a build environment.

Normally, cross-compiling Golang programs is a breeze, because the go compiler supports it extremely well. However, many go programs pull in C/C++ code via their dependencies, so the C/C++ code also has to be cross-compiled. Go allows us to do this, but it requires a working C/C++ cross compiler, which I was not able to figure out on Alpine Linux. I have tackled this problem before, however, when I was building a docker image for goatcounter, the ethical web analytics system I'm using. I based my work on the excellent blog post on statically compiling Go binaries by Martin 'arp242' Tournoij, the author of Goatcounter himself. Owncast actually has the exact same quirks that goatcounter has, because they both pull in the C/C++ code for SQLite. So the -tags sqlite_omit_load_extension trick Martin talked about was just the thing!
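A rough sketch of what such a cross-compiled, statically linked build can look like is below. It assumes Debian's `gcc-arm-linux-gnueabihf` cross toolchain is installed, and the exact commands in the Dockerfile may differ. The build command is shown as a comment at the top of a tiny Go program, which is purely illustrative: it only reports which platform it was built for, so you can check the result on the target board. `buildTarget` is a made-up helper name.

```go
// Assumed build command (Debian cross toolchain, not necessarily the one
// used for the real image):
//
//   CGO_ENABLED=1 GOOS=linux GOARCH=arm GOARM=7 \
//   CC=arm-linux-gnueabihf-gcc \
//   go build -tags sqlite_omit_load_extension \
//     -ldflags '-extldflags "-static"' .
package main

import (
	"fmt"
	"runtime"
)

// buildTarget reports the OS and CPU architecture this binary was compiled for.
func buildTarget() string {
	return runtime.GOOS + "/" + runtime.GOARCH
}

func main() {
	// On the ARMv7 board this should report linux/arm.
	fmt.Println("built for", buildTarget())
}
```

`CGO_ENABLED=1` together with `CC` tells the go tool to hand the C parts (SQLite, in this case) to the ARM cross compiler, while `GOOS`, `GOARCH` and `GOARM` select the target for the Go parts.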

So, finally at the end of the day I had a working docker build and working docker image for running Owncast on my ARMv7-based single board computer. If you wish to run it yourself on your own SBC, you could use a docker-compose like this:

    version: "3.3"
    services:
      owncast:
        image: sequentialread/owncast:0.0.7-beta8
        restart: always
        command: ["/app/owncast", "-enableVerboseLogging"]
        volumes:
          - type: bind
            source: ./owncast/data
            target: /app/data

Or if you would like to see the docker-compose file I use to host it myself, check out my forest/sequentialread-caddy-config repo.

Also, if you are using an ARM64, AMD64, or other type of server, leave a comment below and I can get you a build for your architecture. I was simply too lazy to implement a cross-platform build "in time for publication" 😛
