Last week, I started streaming my programming work every day using cyberia's shared streaming service, stream.cyberia.club. They had been using Open Streaming Platform in the past, but it was a bit clunky & difficult to maintain, so they tried out a different self-hosted streaming system, Owncast, instead. Owncast is straightforward and minimal: it has no concept of user accounts or multiple streams. It's just one stream per server instance, with a relatively limited admin API.

However, it does one thing and does it really well. It has some nice configuration options and supports live video transcoding, meaning that viewers automatically receive a video bitrate suited to their connection speed.

Anyways, I was having so much fun streaming every day that I decided to set up my own instance of Owncast on sequentialread.com. Check it out!

--> stream.sequentialread.com <--

I encountered a bit of an interesting problem along the way. Owncast's transcoding feature is great for stream viewers, but it also means that the streaming server has to be able to handle the transcoding workload. If you're like me and you want to be able to use an ancient or very low power computer as a server, that's going to present a problem.

The laptop I'm using as a workstation is more than powerful enough to record & transcode the video, but I wouldn't want to use it as a server: keeping it running all the time would be extremely impractical. So I got a little bit creative. You see, Owncast is an HTTP-based application, meaning it's built out of requests and responses. By understanding how the application works, we can probably find a solution to this problem.

Understanding how the application works

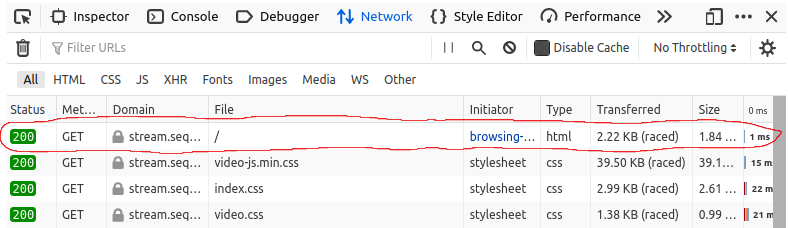

You can use your web browser to view the internal HTTP machinations of any HTTP based application (web application) that you come across by opening up the Network tab in your browser's developer tools. As an example, here's what it looks like in my browser when I visit my Owncast instance:

The circled request/response pair at / is the first one, a.k.a. the "index" request. Its response type is html, so this is the web server returning the layout of the page along with a list of additional resources that must be loaded to display it. The web browser then downloads each of those additional resources; in this case, you can see it downloading CSS (Cascading Style Sheets) files.

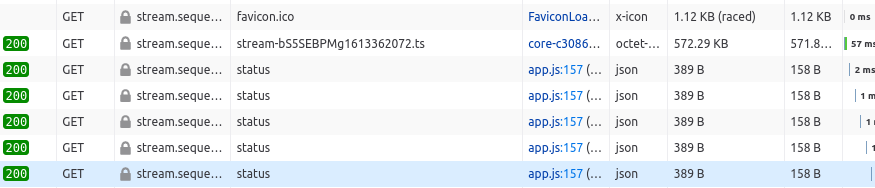

After the page loads and the web application has been sitting idle for a while, the bottom (most recent) requests in the network tab look like this:

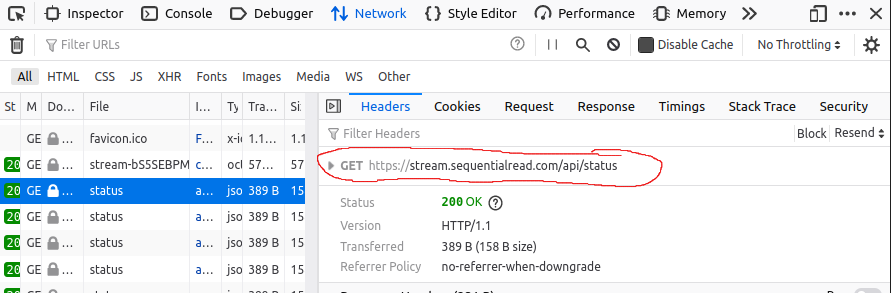

Here you can see that an entity called status is being requested every 10 seconds or so, with a response type of json (JavaScript Object Notation). If we click on one of these requests to drill into the details, we can see that the request path (the part of the URL after the domain name) is /api/status.

So, presumably, this is the web application in your browser periodically asking the server for its current status (streaming or not, how many viewers are watching right now, etc.). This is typical behavior for a real-time interactive application like a live video stream.
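For a concrete picture of that polling loop, here is a minimal Go sketch of a client doing what the browser app appears to do: request /api/status on a timer and report each result. It assumes the JSON contains `online` and `viewerCount` fields; those field names and the function names below are assumptions for illustration, not a documented Owncast API.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"time"
)

// streamStatus holds the two fields this sketch ASSUMES /api/status returns.
// encoding/json silently ignores any other fields in the response.
type streamStatus struct {
	Online      bool `json:"online"`
	ViewerCount int  `json:"viewerCount"`
}

// parseStatus decodes the body of an /api/status response.
func parseStatus(data []byte) (streamStatus, error) {
	var s streamStatus
	err := json.Unmarshal(data, &s)
	return s, err
}

// fetchStatus performs a single GET of baseURL + "/api/status".
func fetchStatus(client *http.Client, baseURL string) (streamStatus, error) {
	res, err := client.Get(baseURL + "/api/status")
	if err != nil {
		return streamStatus{}, err
	}
	defer res.Body.Close()
	if res.StatusCode != http.StatusOK {
		return streamStatus{}, fmt.Errorf("unexpected response: %s", res.Status)
	}
	body, err := io.ReadAll(res.Body)
	if err != nil {
		return streamStatus{}, err
	}
	return parseStatus(body)
}

// pollStatus imitates the browser app: it fetches the status once, then again
// every interval, passing each result to report until stop is closed.
func pollStatus(client *http.Client, baseURL string, interval time.Duration,
	stop <-chan struct{}, report func(streamStatus, error)) {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		report(fetchStatus(client, baseURL))
		select {
		case <-stop:
			return
		case <-ticker.C:
		}
	}
}
```

Calling pollStatus with a 10-second interval against a hypothetical local instance such as http://localhost:8080 would roughly mirror the requests visible in the network tab.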

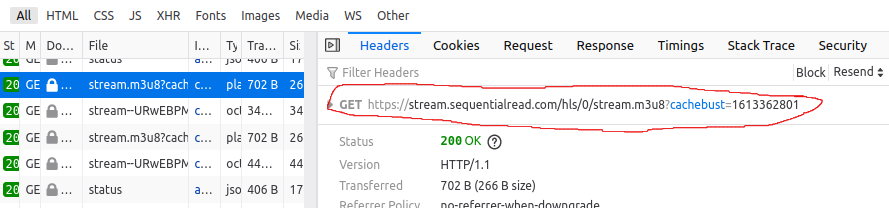

When I start streaming, I see something else in the network tab:

In between the /api/status requests, I'm also seeing new kinds of requests, like /hls/0/stream.m3u8?cachebust=1613362801 and /hls/0/stream--URwEBPMg1613362802.ts, with response content types like application/octet-stream and text/plain. HLS stands for HTTP Live Streaming, the video streaming standard that Owncast uses. The .m3u8 file is a playlist listing the most recent video chunks, and the .ts files are the chunks themselves, which the web application downloads from the streaming server in real time.
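The .m3u8 playlist is plain text, and the video lives in the .ts files it lists. The Go sketch below shows how a client could pull the chunk URIs out of a media playlist and resolve them against the playlist's own URL. The function names are hypothetical, and the playlist contents are not captured from Owncast. Because the playlist is rewritten every few seconds as new chunks arrive, the cachebust query parameter is presumably there to keep browsers from reusing a stale copy.

```go
package main

import (
	"bufio"
	"net/url"
	"strings"
)

// segmentURIs returns the URIs listed in an HLS media playlist (.m3u8).
// In this format each line is blank, starts with "#" (a tag such as #EXTINF,
// or a comment), or is a URI; here the URIs are the .ts video chunks.
func segmentURIs(playlist string) []string {
	var uris []string
	scanner := bufio.NewScanner(strings.NewReader(playlist))
	for scanner.Scan() {
		line := strings.TrimSpace(scanner.Text())
		if line == "" || strings.HasPrefix(line, "#") {
			continue
		}
		uris = append(uris, line)
	}
	return uris
}

// resolveSegments turns the (usually relative) segment URIs into absolute
// URLs, resolved against the URL the playlist was downloaded from.
func resolveSegments(playlistURL, playlist string) ([]string, error) {
	base, err := url.Parse(playlistURL)
	if err != nil {
		return nil, err
	}
	var out []string
	for _, u := range segmentURIs(playlist) {
		ref, err := url.Parse(u)
		if err != nil {
			return nil, err
		}
		out = append(out, base.ResolveReference(ref).String())
	}
	return out, nil
}
```

Resolving a chunk name against .../hls/0/stream.m3u8?cachebust=... drops the playlist's query string, because the chunk URI supplies its own (empty) query.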

In summary, we've identified three categories of content that come from the Owncast server:

- The web page and all of its code, layout, and content, like fonts, icons, and images.

- The JSON entities that are served under the /api path prefix.

- The HLS video stream that is served under the /hls path prefix.
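Since the three categories line up with URL path prefixes, the categorization itself fits in a few lines. Here's a Go sketch under that assumption (anything outside /api and /hls counts as category 1); the type and function names are hypothetical.

```go
package main

import "strings"

// category numbers follow the three-item list above.
// Assumption: the category is decided by the URL path prefix alone.
type category int

const (
	webPage  category = iota + 1 // 1: HTML, JavaScript, CSS, fonts, icons, images
	apiJSON                      // 2: JSON entities under /api
	hlsVideo                     // 3: HLS playlists and video chunks under /hls
)

// hasPathPrefix reports whether path is prefix itself or lies beneath it,
// so that a file like "/apiary.png" is not mistaken for an /api request.
func hasPathPrefix(path, prefix string) bool {
	return path == prefix || strings.HasPrefix(path, prefix+"/")
}

// categorize assigns a request path to one of the three categories.
func categorize(path string) category {
	switch {
	case hasPathPrefix(path, "/api"):
		return apiJSON
	case hasPathPrefix(path, "/hls"):
		return hlsVideo
	default:
		return webPage
	}
}
```

Checking for the trailing slash keeps a hypothetical static file such as /apiary.png from being lumped in with the API.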

Using HTTP Caching w/ Separate Concerns

Here's the fun part. We categorized the HTTP request/response pairs so that we can use an HTTP reverse proxy server (colloquially called a "web server") to slightly alter the way each category is handled in order to solve our problem.

You see, the response content in category 1 (the web page) never changes. It's the same every time someone visits the page. Only categories 2 and 3 (the /api JSON and the /hls video) contain dynamic content. This separation of static from dynamic content is a common design pattern in modern web applications, and it offers a lot of benefits. In our case, it helps because it lines up with our two concerns:

- We want the server to be on all the time.

- We want the Owncast application to run on our laptop workstation where it has a fast & modern CPU to encode video in real time.

Concern A, keeping the server on all the time, relates to the static content (category 1). Concern B, encoding video on the laptop, relates to the dynamic content (categories 2 and 3).

How so?

Well, if you recall, before I started the stream, the page displayed just fine. It said "stream is offline" and the chat window was empty, but that's totally normal behavior when no one is streaming. All the code was doing was polling the /api/status endpoint every 10 seconds, and because the stream was offline, that endpoint wasn't returning anything interesting. While the stream wasn't running, it almost didn't matter whether the server was there at all, as long as the static content could be downloaded.

Do you see where this is going? 😉 I can host Owncast on my laptop, which won't be on all the time, as long as my web server, which IS on all the time, can serve the static content.

Here's the trick. A web server can cache any given request/response pair for later, and if it sees the same request again, it can simply return the response it cached previously. I use nginx (pronounced engine-x) as a web server to host my stuff, so all I had to do was configure nginx to do 4 things:

- When it gets a request for stream.sequentialread.com, it forwards that request to my laptop.

- When it gets a request for stream.sequentialread.com, it categorizes the request as either static or dynamic.

- When it gets a response from the laptop, if the request was for a static resource, it caches the response indefinitely.

- When it tries to contact the laptop but gets no response, it will simply give up & return the cached content.
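The four rules above map onto a small amount of logic. The Go sketch below is not the nginx configuration used here. It's a hypothetical stand-alone proxy that applies the same rules: forward every request, cache 200 responses for static paths indefinitely, and fall back to the cache when the upstream can't be reached. It only issues GET requests and ignores request headers, so treat it as an illustration of the caching behavior rather than a drop-in replacement.

```go
package main

import (
	"errors"
	"fmt"
	"io"
	"net/http"
	"strings"
	"sync"
)

// errUpstreamDown marks a failed attempt to reach the laptop running Owncast.
var errUpstreamDown = errors.New("upstream unreachable")

// response is the part of an HTTP response this sketch stores and replays.
type response struct {
	status      int
	contentType string
	body        []byte
}

// fetchFunc forwards a request URI (path plus query) to the upstream.
type fetchFunc func(uri string) (response, error)

// cachingProxy is a sketch only: GET-only, no request-header forwarding.
type cachingProxy struct {
	fetch fetchFunc
	mu    sync.RWMutex
	cache map[string]response // static responses, kept indefinitely
}

func newCachingProxy(fetch fetchFunc) *cachingProxy {
	return &cachingProxy{fetch: fetch, cache: make(map[string]response)}
}

// isStatic applies rule 2: everything outside /api and /hls counts as static.
func isStatic(uri string) bool {
	return !strings.HasPrefix(uri, "/api/") && !strings.HasPrefix(uri, "/hls/")
}

// handle applies rules 1, 3 and 4 and reports whether the cache was used.
func (p *cachingProxy) handle(uri string) (response, bool) {
	resp, err := p.fetch(uri) // rule 1: forward to the laptop
	if err == nil {
		if isStatic(uri) && resp.status == http.StatusOK {
			p.mu.Lock()
			p.cache[uri] = resp // rule 3: remember static responses
			p.mu.Unlock()
		}
		return resp, false
	}
	p.mu.RLock()
	cached, ok := p.cache[uri]
	p.mu.RUnlock()
	if ok {
		return cached, true // rule 4: no answer, so fall back to the cache
	}
	return response{status: http.StatusBadGateway, contentType: "text/plain",
		body: []byte("stream server is offline\n")}, false
}

func (p *cachingProxy) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	resp, _ := p.handle(r.URL.RequestURI())
	if resp.contentType != "" {
		w.Header().Set("Content-Type", resp.contentType)
	}
	w.WriteHeader(resp.status)
	w.Write(resp.body)
}

// httpFetch returns a fetchFunc that sends GET requests to baseURL.
func httpFetch(client *http.Client, baseURL string) fetchFunc {
	return func(uri string) (response, error) {
		res, err := client.Get(baseURL + uri)
		if err != nil {
			return response{}, fmt.Errorf("%w: %v", errUpstreamDown, err)
		}
		defer res.Body.Close()
		body, err := io.ReadAll(res.Body)
		if err != nil {
			return response{}, fmt.Errorf("%w: %v", errUpstreamDown, err)
		}
		return response{status: res.StatusCode,
			contentType: res.Header.Get("Content-Type"), body: body}, nil
	}
}
```

To run it, something like http.ListenAndServe(":8081", newCachingProxy(httpFetch(&http.Client{Timeout: 5 * time.Second}, "http://laptop.lan:8080"))) would do, where the port and laptop address are placeholders. The client timeout matters: it bounds how long a request waits before rule 4 kicks in.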

So when I want to stream, all I have to do is launch the Owncast application on my workstation & start streaming with OBS (Open Broadcaster Software). As soon as the laptop starts serving out files, the nginx web server will start serving them out as well. Finally, when I stop the stream and shut down the Owncast process on my laptop, nginx will continue to serve the cached static resources for the stream indefinitely, and the stream will appear to be down until I start it up again.

This implementation seemed to work OK on the first try, except for one little wart. Owncast's separation of concerns is not 100% pristine: there is one HTTP request, /api/config, that lives under the dynamic /api prefix but actually returns static content. So my nginx wasn't caching it at first, which left the title & description of the stream blank whenever I turned the laptop off. However, this was an easy fix; I just told nginx to cache that specific request.

It's important to note that we have to categorize the responses; we can't just cache all of them. If I tried to cache every single video chunk on my web server, it would put unnecessary stress on the server & fill up the disk rapidly.
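In code, that distinction boils down to a cacheability check with an exception list. Here's a Go sketch, assuming the same prefix-based categories, with /api/config as the one exception described above; the names are hypothetical. HLS paths are never cached, which is what keeps the video chunks off the disk.

```go
package main

import "strings"

// alwaysCache lists paths that live under the dynamic /api prefix but whose
// responses are effectively static. /api/config is the case from the post;
// any others would be assumptions to verify against your own instance.
var alwaysCache = map[string]bool{
	"/api/config": true,
}

// shouldCache reports whether a response for path should be stored
// indefinitely. HLS playlists and video chunks are never stored, so the
// cache does not grow with every few seconds of stream.
func shouldCache(path string) bool {
	if alwaysCache[path] {
		return true
	}
	return !strings.HasPrefix(path, "/api/") && !strings.HasPrefix(path, "/hls/")
}
```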

EDIT: After I published this, I discovered that when the laptop running Owncast was fully powered off, nginx would take a lot longer to time out before it could fall back to the cached version. This was exacerbated by the way Owncast's JavaScript app loads one file at a time, so the delays added up. Either because I'm not an nginx expert, or maybe because the version I was using was too old, I was never able to completely fix this problem.

I actually ended up writing my own little app dedicated to caching Owncast's static files. It does the same thing I described in this article, but it also actively monitors the status of Owncast and skips directly to the cache if it already knows that Owncast is not running. I published it as a platform-agnostic Docker image on Docker Hub as sequentialread/owncast-caching-proxy.

If you are curious, I published all the source code here: https://git.sequentialread.com/forest/sequentialread-stream/src/master/cachingproxy
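The linked source is the authoritative version of that app. As a rough, independent illustration of the "skip directly to the cache" idea, here's a Go sketch that assumes the monitoring works by polling /api/status in the background with a short timeout. statusMonitor, httpStatusCheck, and source are hypothetical names, not taken from that repository, and atomic.Bool requires Go 1.19 or newer.

```go
package main

import (
	"context"
	"net/http"
	"sync/atomic"
	"time"
)

// statusMonitor remembers whether Owncast answered its most recent health
// check, so request handlers can read a flag instead of waiting on a
// connection to a laptop that is switched off.
type statusMonitor struct {
	check func() bool // one health probe; true means Owncast responded
	up    atomic.Bool
}

// probe runs a single health check and records the result.
func (m *statusMonitor) probe() { m.up.Store(m.check()) }

// Up reports the outcome of the most recent probe.
func (m *statusMonitor) Up() bool { return m.up.Load() }

// run probes once immediately, then every interval until ctx is cancelled.
func (m *statusMonitor) run(ctx context.Context, interval time.Duration) {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		m.probe()
		select {
		case <-ctx.Done():
			return
		case <-ticker.C:
		}
	}
}

// httpStatusCheck builds a probe that GETs baseURL + "/api/status".
// Assumption: a 200 from /api/status means Owncast is running. The short
// client timeout bounds how long a powered-off laptop can stall the probe.
func httpStatusCheck(baseURL string, timeout time.Duration) func() bool {
	client := &http.Client{Timeout: timeout}
	return func() bool {
		res, err := client.Get(baseURL + "/api/status")
		if err != nil {
			return false
		}
		res.Body.Close()
		return res.StatusCode == http.StatusOK
	}
}

// source decides where a request should be answered from.
func source(upstreamUp, haveCached bool) string {
	switch {
	case upstreamUp:
		return "upstream"
	case haveCached:
		return "cache" // known down: skip the connection attempt entirely
	default:
		return "offline" // nothing cached either: answer with an error right away
	}
}
```

A request handler would call source(monitor.Up(), haveCached) and only contact the laptop when the answer is "upstream", so while the laptop is known to be off, requests for cached files never wait on a connection attempt.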
